RDS is popularly known as AWS managed service but we would like to call it as CloudDBA managed service . We are starting this series “RDS – Managed by CloudDBA” to focus on the need for DBA in the cloud era . The cloud does not negate the need of DBAs ; They are very much needed to optimize the database environments in cloud.Please read part 1 : Managing RDS MySQL logs

Managing AWS RDS PostgreSQL logs :

Managing RDS logs is different from Native PostgreSQL in many ways .Amazon RDS for PostgreSQL generates query and error logs. RDS PostgreSQL writes autovacuum information and rds_admin actions to the error log. PostgreSQL also logs connections, disconnections, and checkpoints to the error log

In order to generate query logs you need to enable log_statement and log_min_duration_statement. Log_statement can be set to none/all/mod. None is the default which does not log any queries . ‘All’ option logs all types of queries and ‘mod’ only records DML statements. log_min_duration_statement can be used to set the cutoff time for queries . For example : If you are interested in queries taking more than 10 seconds , you can set log_min_duration_statement to 10 seconds

How to view the logs that are currently enabled and available ?

Below cli command can be executed to list the available log files for a given instance

aws --output text rds describe-db-log-files --db-instance-identifier postgres-1 --region us-east-2 DESCRIBEDBLOGFILES 1600869540000 error/postgres.log 5875 DESCRIBEDBLOGFILES 1600869540000 error/postgresql.log.2020-09-23-13 2174 DESCRIBEDBLOGFILES 1600869900000 error/postgresql.log.2020-09-23-14 654 [root@ip-172-31-35-8 ec2-user]#

Options to collect query stats ?

Below are some of the options you can enable to gather detailed query statistics :

CloudDBA In Action : What if we want to look at error logs for the last month or so ? If you use the default settings you will not be able to do that .By default, the logs are retained for 4320 minutes (three days). The max allowed value for this parameter is 10080 minutes (seven days). What if there is a production issue ; error logs are crucial for evidence gathering and they are not available now ? If you do not have dedicated DBA in cloud things like this can happen in production . A Cloud DBA/DBE can continuously fetch logs from RDS and save it to S3 or other storage with a proper retention interval matching your needs .

Please refer to our repo https://github.com/klouddb/klouddb_tools For a sample script that can be used to download logs continuously

Using pgbadger to analyze the logs :

You can run something like below to use pgbadger on your logs

./pgbadger -p “%t:%r:%u@%d:[%p]:” postgresql.log.2020-10-14-11 -o pgbadger_postgres1.html

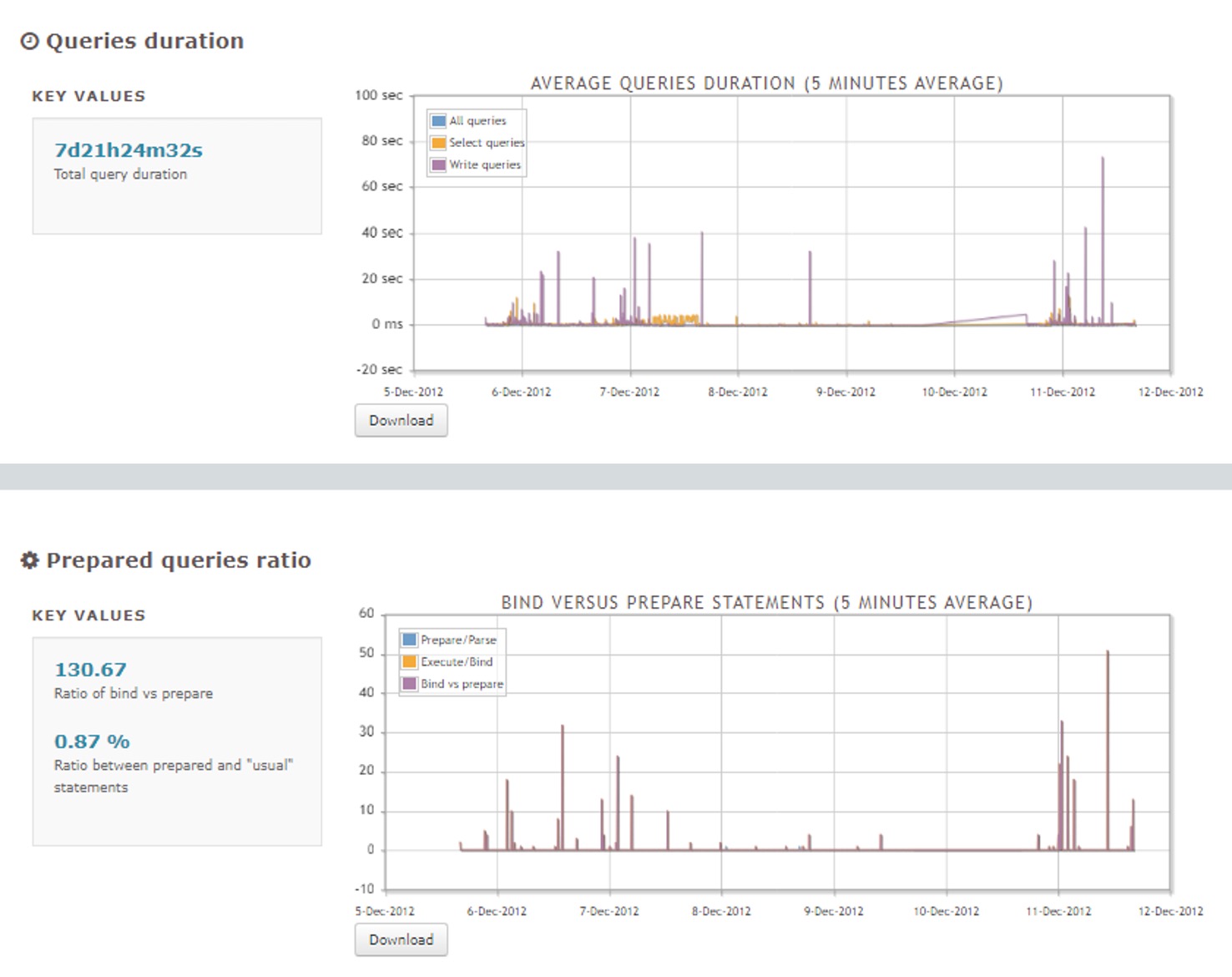

It produces a nice html report like below

CloudDBA In Action : Enabling detailed query logging could adversely impact performance . Only a cloudDBA can run benchmarking tests to validate before implementing aforementioned changes to config . CloudDBA can continuously download the logs using ourrepo https://github.com/klouddb/klouddb_toolsand get pgbadger reports as needed

How to use our Python script to download log files on a continuous basis ?

Below is a sample set of files that you can retrieve from RDS PostgreSQL :

aws --output text rds describe-db-log-files --db-instance-identifier postgres-1 --region us-east-2 DESCRIBEDBLOGFILES 1602427710000 error/postgres.log 3552 DESCRIBEDBLOGFILES 1602428370000 error/postgresql.log.2020-10-11-14 3160 DESCRIBEDBLOGFILES 1602431972000 error/postgresql.log.2020-10-11-15 4338 DESCRIBEDBLOGFILES 1602435573000 error/postgresql.log.2020-10-11-16 3912 DESCRIBEDBLOGFILES 1602439173000 error/postgresql.log.2020-10-11-17 3912 DESCRIBEDBLOGFILES 1602442774000 error/postgresql.log.2020-10-11-18 4042 DESCRIBEDBLOGFILES 1602446374000 error/postgresql.log.2020-10-11-19 3912 DESCRIBEDBLOGFILES 1602449974000 error/postgresql.log.2020-10-11-20 3912 DESCRIBEDBLOGFILES 1602453576000 error/postgresql.log.2020-10-11-21 3912 DESCRIBEDBLOGFILES 1602457177000 error/postgresql.log.2020-10-11-22 3912 DESCRIBEDBLOGFILES 1602460779000 error/postgresql.log.2020-10-11-23 3912 DESCRIBEDBLOGFILES 1602464379000 error/postgresql.log.2020-10-12-00 3912

How does it store the backups in S3

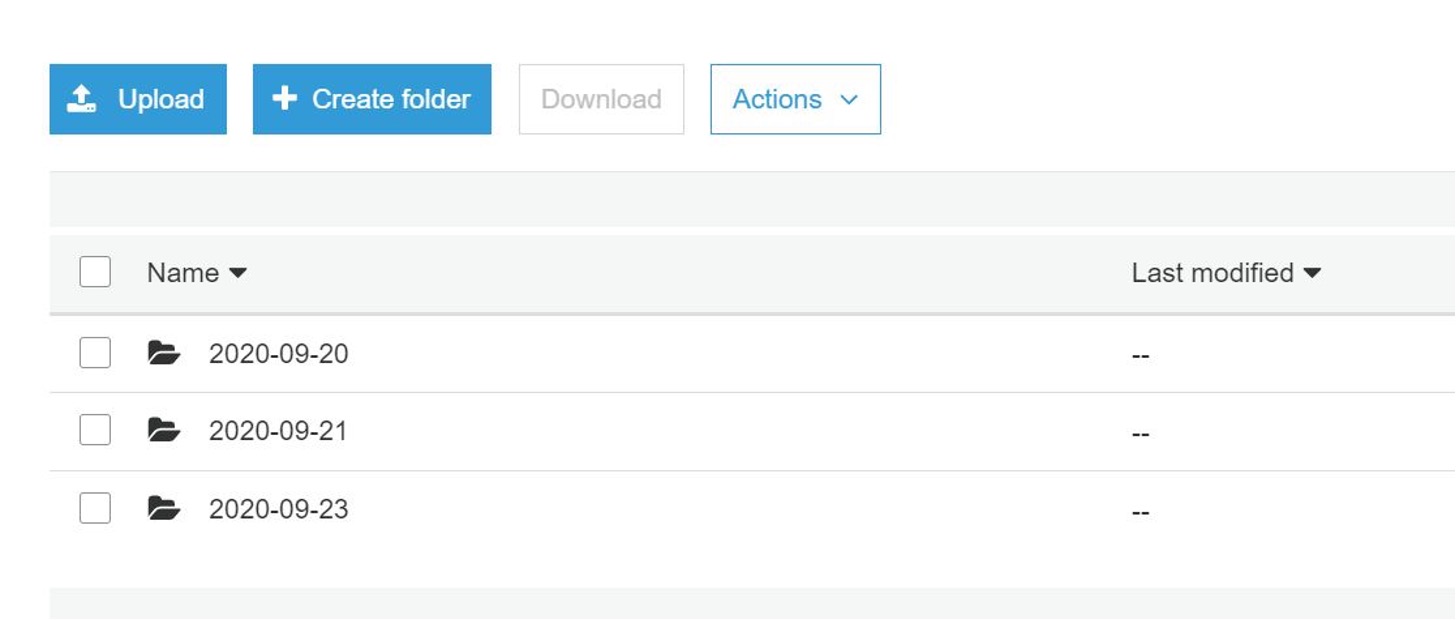

We have a separate directory per instance . For example in below example we have separate directory for instance postgres-1

Under the instance directory , we have a folder/directory by date

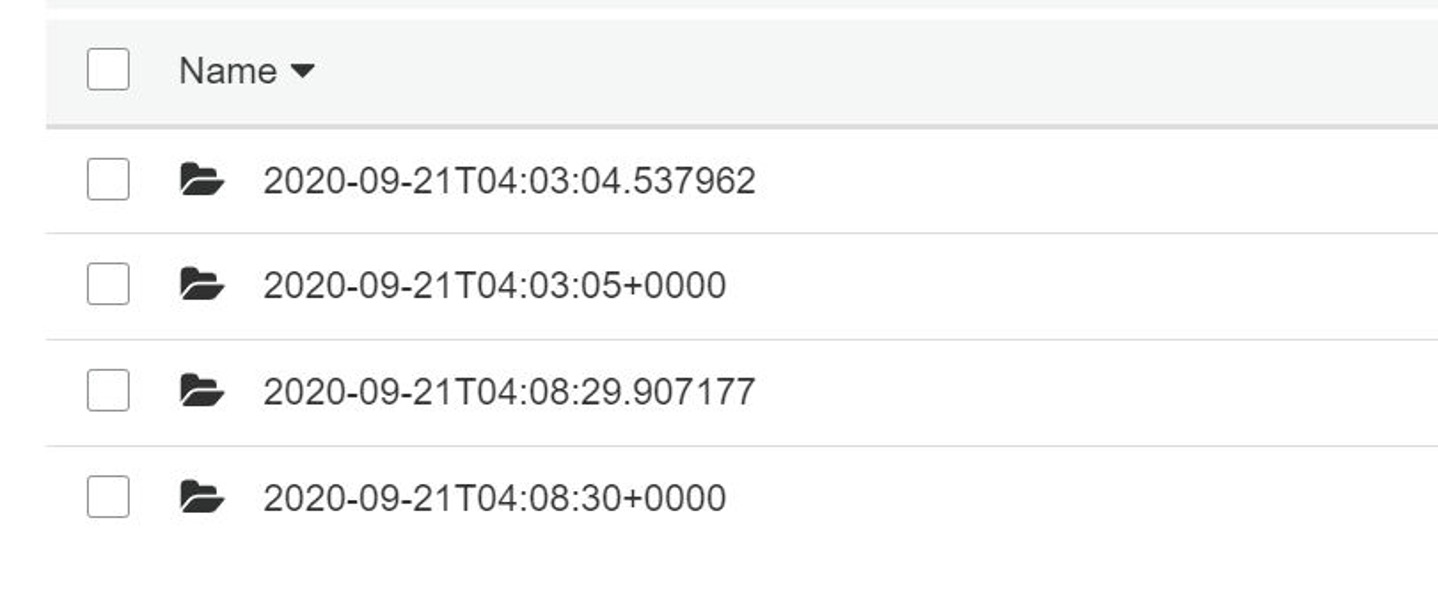

Under the date directory , we have a folder by timestamp(timestamp of the run). You will have the logs for the run under this subfolder